[TOC]

1. Environment Preparation

1.1 Hardware Environment

The Kubernetes cluster is deployed on five Tencent Cloud CVM instances:

| Hostname | IP | Spec | Role |
|---|---|---|---|
| (Old) VM_0_1_centos; (New) tf-k8s-m1 | 10.0.0.1 | 4 cores, 8 GB | Kubernetes master, also an etcd node |
| (Old) VM_0_2_centos; (New) tf-k8s-m2 | 10.0.0.2 | 4 cores, 8 GB | Kubernetes master, also an etcd node |
| (Old) VM_0_3_centos; (New) tf-k8s-m3 | 10.0.0.3 | 4 cores, 8 GB | Kubernetes master, also an etcd node |
| (Old) VM_0_4_centos; (New) tf-k8s-n1 | 10.0.0.4 | 4 cores, 8 GB | Worker node; runs the scheduled pods |
| (Old) VM_0_5_centos; (New) tf-k8s-n2 | 10.0.0.5 | 4 cores, 8 GB | Worker node; runs the scheduled pods |

1.2 Software Environment

| Component | Version |
|---|---|
| Operating system | CentOS 7 |
| kubeadm | 1.13.3 |
| kubernetes | 1.13.3 |
| Docker | docker-ce 18.06.2 |

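1.3 System Settings

Before installing, change the following settings on every machine:

- Disable the firewall and SELinux.
- Disable swap.
- Turn off swappiness for the Linux swap space.
- Make packets forwarded by the L2 bridge subject to the iptables FORWARD rules. CNI plugins require this setting; see Network Plugin Requirements for details.
- Upgrade the kernel to the latest version (the default CentOS 7 kernel is 3.10.0-862.el7.x86_64; check it with 'uname -a'). For the reason, see "Why use the 4.18 kernel?".
- Enable IPVS.
- Change the hostname (a hostname that contains special characters must be changed, otherwise later steps fail).

The script that applies these settings is as follows:

    # ---------- Disable the firewall and SELinux -----------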
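As a rough illustration of the settings listed above, the following sketch shows one way they could be applied on a CentOS 7 node. It is not the original script: the function names and the /etc/sysctl.d/k8s.conf path are assumptions, and the kernel upgrade and hostname change are left out.

```shell
#!/bin/sh
# Sketch of the per-node system settings (assumed, not the author's script).
# Run as root on a CentOS 7 node: sh prepare.node.sh apply

# Print the kernel parameters for bridge filtering, forwarding and swappiness.
k8s_sysctl_conf() {
  cat <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}

# Print the kernel modules used by IPVS mode.
# On kernels 4.19 and later, nf_conntrack_ipv4 is named nf_conntrack.
ipvs_modules() {
  echo "ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"
}

apply_settings() {
  # Firewall and SELinux
  systemctl stop firewalld && systemctl disable firewalld
  setenforce 0 || true
  sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
  # Swap: turn it off now and comment it out of /etc/fstab for later boots
  swapoff -a
  sed -i '/ swap / s/^/#/' /etc/fstab
  # Bridge filtering and swappiness
  modprobe br_netfilter
  k8s_sysctl_conf > /etc/sysctl.d/k8s.conf
  sysctl --system
  # IPVS
  for m in $(ipvs_modules); do modprobe "$m"; done
}

# Nothing is changed unless the script is called with "apply".
if [ "${1:-}" = "apply" ]; then apply_settings; fi
```

Keeping the sysctl values in a function that only prints them makes it easy to review the exact parameters before anything is written to the node.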

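1.4 Passwordless SSH Login Between Cluster Machines

1.4.1 Configure hosts

To simplify later steps, add the host name entries of all machines to /etc/hosts on every machine:

    # vi /etc/hosts

1.4.2 Create a User

    # useradd kube

Note: the visudo command is used to grant sudo privileges to the kube user.

1.4.3 Set Up Passwordless Login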

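- On every machine, generate an SSH private/public key pair for passwordless login for both the root user and the kube user (each user has its own key pair).

      ## Generate the SSH private and public keys for the root user

This creates a .ssh directory under /root that contains the following three files:

    -rw------- 1 root root 2398 Feb 13 15:18 authorized_keys

authorized_keys stores the public keys of the other machines that this machine trusts; id_rsa stores this machine's private key; id_rsa.pub stores this machine's public key.

    ## Generate the SSH private and public keys for the kube user

This creates a .ssh directory with the corresponding files under /home/kube.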

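- Once every machine has its key pair, copy each machine's public key to all the other machines and append it to their authorized_keys files.

Note: each node's own public key must also be added to its own authorized_keys file, so that the node can ssh to itself.

    ## Sync the public key of every node to the corresponding directory

Test that passwordless SSH login works:

    ssh root@tf-k8s-m1

Tip: if /root/.ssh/authorized_keys does not exist for root on another machine, create it manually. Note that authorized_keys must have permission 600.

    ## If the permission of authorized_keys is not 600, run the command that changes it.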
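The key handling described above can be sketched as a small helper that installs a public key with the permissions sshd expects. The helper name add_authorized_key is hypothetical; ssh-keygen and ssh-copy-id in the comments are the standard OpenSSH tools, shown only as typical usage.

```shell
#!/bin/sh
# Sketch (assumed helper name): add a public key to an .ssh directory and
# enforce the permissions sshd expects (700 on the directory, 600 on the file).

# add_authorized_key <public-key-file> <ssh-dir>
add_authorized_key() {
  pub="$1"; dir="$2"
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$dir/authorized_keys" && chmod 600 "$dir/authorized_keys"
  # Append the key only if the exact line is not already present.
  grep -qxF "$(cat "$pub")" "$dir/authorized_keys" \
    || cat "$pub" >> "$dir/authorized_keys"
}

# Typical use on each node (not executed here):
#   ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
#   add_authorized_key "$HOME/.ssh/id_rsa.pub" "$HOME/.ssh"   # ssh to itself
#   ssh-copy-id root@tf-k8s-m2                                # other nodes
```

Because the helper checks for an existing entry first, running it repeatedly does not duplicate keys in authorized_keys.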

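2. Installation Steps

All of the following steps can be performed as the kube user.

2.1 Install Docker

kubeadm's HA mode places requirements on the Docker version, so this tutorial installs the officially recommended Docker version.

    # Install dependencies

For convenience, create a script on tf-k8s-m1 that deploys Docker to all nodes in one batch.

    ## Create install.docker.sh

Run the install.docker.sh script:

    chmod a+x install.docker.sh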
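A batch deployment from tf-k8s-m1 typically loops over the nodes and runs a script on each one over ssh. The sketch below illustrates that pattern only; the run_on_all helper and the DRY_RUN switch are assumptions, and it relies on the passwordless root login set up earlier.

```shell
#!/bin/sh
# Sketch: run a local script on every node over ssh (assumed helper).
NODES="tf-k8s-m1 tf-k8s-m2 tf-k8s-m3 tf-k8s-n1 tf-k8s-n2"

# run_on_all <script>
# With DRY_RUN=1 (the default) the commands are only printed.
run_on_all() {
  script="$1"
  for host in $NODES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh root@$host bash -s < $script"
    else
      # Feed the local script to a remote bash over ssh.
      ssh "root@$host" bash -s < "$script"
    fi
  done
}
```

For example, `run_on_all install.docker.sh` prints the five ssh commands, and `DRY_RUN=0 run_on_all install.docker.sh` executes them.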

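2.2 Install the Kubernetes yum Repository and the kubelet, kubeadm, and kubectl Components

2.2.1 Configure the kubernetes.repo yum Repository on All Machines

The installation script is as follows:

    ## Create the script: install.k8s.repo.sh

Run the install.k8s.repo.sh script:

    chmod a+x install.k8s.repo.sh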
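For orientation, a kubernetes.repo definition usually looks like the output of the sketch below. The Aliyun mirror URLs are an assumption (the repository used by the original script is not shown), and the k8s_repo function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print a kubernetes.repo definition.
# The Aliyun mirror URLs are an assumption, not taken from the original script.
k8s_repo() {
  cat <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# On each node, as root (not executed here):
#   k8s_repo > /etc/yum.repos.d/kubernetes.repo
```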

2.2.2 Install the kubelet, kubeadm, and kubectl Components on All Machines

The installation script is as follows:

    ## Create the script: install.k8s.basic.sh

Run the install.k8s.basic.sh script:

    chmod a+x install.k8s.basic.sh

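2.3 Initialize the kubeadm Configuration Files

Create the kubeadm configuration files for the three masters tf-k8s-m1, tf-k8s-m2, and tf-k8s-m3. They mainly configure the domain names used when generating certificates and the etcd cluster.

    ## Create the script: init.kubeadm.config.sh

Run the init.kubeadm.config.sh script:

    chmod a+x init.kubeadm.config.sh

After it succeeds, the home directory of the kube user (/home/kube) on tf-k8s-m1, tf-k8s-m2, and tf-k8s-m3 contains the corresponding kubeadm-config.tf-k8s-m*.yaml file. This file is used later when initializing each master: it drives the certificates, the etcd configuration, the kubelet configuration, the kube-apiserver configuration, the kube-controller-manager configuration, and so on.

The kubeadm configuration file for each master node:

    cvm tf-k8s-m1:kubeadm-config.tf-k8s-m1.yaml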
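To give an idea of what such a per-master file contains, the sketch below prints a ClusterConfiguration in the kubeadm.k8s.io/v1beta1 format used by kubeadm 1.13, following the layout of the upstream stacked-etcd HA guide. It is an assumption about the generated content, not the original script; the pod subnet 10.244.0.0/16 (flannel's default) and the function name kubeadm_config are also assumptions.

```shell
#!/bin/sh
# Sketch: print a kubeadm ClusterConfiguration for one master (assumed content).
# kubeadm_config <hostname> <ip> <etcd-initial-cluster> <new|existing>
kubeadm_config() {
  name="$1"; ip="$2"; initial="$3"; state="$4"
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.3
imageRepository: registry.aliyuncs.com/google_containers
controlPlaneEndpoint: "api.tf-k8s.xiangwushuo.com:6443"
apiServer:
  certSANs:
  - "api.tf-k8s.xiangwushuo.com"
etcd:
  local:
    extraArgs:
      name: "$name"
      listen-client-urls: "https://127.0.0.1:2379,https://$ip:2379"
      advertise-client-urls: "https://$ip:2379"
      listen-peer-urls: "https://$ip:2380"
      initial-advertise-peer-urls: "https://$ip:2380"
      initial-cluster: "$initial"
      initial-cluster-state: "$state"
    serverCertSANs:
    - "$name"
    - "$ip"
    peerCertSANs:
    - "$name"
    - "$ip"
networking:
  podSubnet: "10.244.0.0/16"
EOF
}

# Example (not executed here):
#   kubeadm_config tf-k8s-m1 10.0.0.1 "tf-k8s-m1=https://10.0.0.1:2380" new \
#     > kubeadm-config.tf-k8s-m1.yaml
```

For the second and third masters, the initial cluster would list the members that already exist plus the new one, with the state set to existing.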

2.4 Pull the Master Images and Run kubeadm init

2.4.1 Pull the Images Locally

Because k8s.gcr.io is not reachable from mainland China, the required images are pulled from the Aliyun image registry instead (the kubeadm-config.tf-k8s-m*.yaml files already specify the Aliyun registry registry.aliyuncs.com/google_containers).

To check that the Aliyun registry has been picked up, run kubeadm config images list on tf-k8s-m1. The result is as follows:

    [kube@tf-k8s-m1 ~]$ kubeadm config images list --config kubeadm-config.tf-k8s-m1.yaml

Next, pull the images on tf-k8s-m1, tf-k8s-m2, and tf-k8s-m3:

    [kube@tf-k8s-m1 ~]$ sudo kubeadm config images pull --config kubeadm-config.tf-k8s-m1.yaml

After the pull succeeds, the images are visible locally:

    [kube@tf-k8s-m1 ~]$ sudo docker images

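2.4.2 Install Master tf-k8s-m1

The goal is a highly available master cluster, so kubeadm initialization has to be run on each of the three masters tf-k8s-m1, tf-k8s-m2, and tf-k8s-m3.

Initializing tf-k8s-m2 and tf-k8s-m3 depends on the certificate files generated when tf-k8s-m1 is initialized, so tf-k8s-m1 must be initialized first.

    [kube@tf-k8s-m1 ~]$ sudo kubeadm init --config kubeadm-config.tf-k8s-m1.yaml

After a successful initialization, the following log is printed:

Note: if initialization fails, run kubeadm reset --force to undo the effects of the previous kubeadm init and return to a clean environment.

    [init] Using Kubernetes version: v1.13.3

This completes the initialization of the first master.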

2.4.3 Configure the kube User

To let the kube user on tf-k8s-m1 manage the cluster with kubectl, the user needs a cluster configuration. On tf-k8s-m1, create the config.using.cluster.sh script as follows:

    ## Create the script: config.using.cluster.sh

Run the config.using.cluster.sh script:

    chmod a+x config.using.cluster.sh
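Giving a regular user kubectl access usually comes down to copying admin.conf into the user's ~/.kube directory, as the hint printed by kubeadm init suggests. The sketch below shows that step under the assumption that config.using.cluster.sh does something similar; the function name and the SUDO variable are hypothetical.

```shell
#!/bin/sh
# Sketch (assumed content): copy the cluster admin kubeconfig for the current
# user. Set SUDO=sudo when admin.conf is readable by root only.

# setup_kubeconfig [admin.conf path] [home directory]
setup_kubeconfig() {
  src="${1:-/etc/kubernetes/admin.conf}"
  home="${2:-$HOME}"
  mkdir -p "$home/.kube"
  ${SUDO:-} cp "$src" "$home/.kube/config"
  # Make the current user the owner so kubectl can read the file.
  ${SUDO:-} chown "$(id -u):$(id -g)" "$home/.kube/config"
}

# On tf-k8s-m1 as the kube user (not executed here):
#   SUDO=sudo setup_kubeconfig
```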

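To verify, check the cluster status with kubectl. The result is as follows:

    [kube@tf-k8s-m1 ~]$ kubectl cluster-info

List all pods in the cluster. The result is as follows:

    [kube@tf-k8s-m1 ~]$ kubectl get pods --all-namespaces

Because no network component (e.g. flannel) has been installed yet, the CoreDNS pods are still shown as Pending. This has no impact for now.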

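2.4.4 Install the CNI Plugin flannel

Note: this must be installed on all nodes.

The installation script is as follows:

    ## Pull the image

After a successful installation, kubectl get pods --all-namespaces shows that all pods are running normally.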

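2.5 Install the Remaining Masters

2.5.1 Sync the CA Certificates from tf-k8s-m1

First, scp the CA certificates on tf-k8s-m1 to the other masters (tf-k8s-m2 and tf-k8s-m3).

For convenience, this is also done with a script, shown below.

Note: make sure the root account on tf-k8s-m1 can log in to the root accounts on tf-k8s-m2 and tf-k8s-m3 without a password.

    ## Create the script: sync.master.ca.sh

Run the script to copy the CA files from tf-k8s-m1 to tf-k8s-m2 and tf-k8s-m3:

    chmod +x sync.master.ca.sh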
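As an illustration of the sync step, the sketch below prints the copy commands for each target master. The file list follows the upstream kubeadm v1.13 guide for stacked control planes and is an assumption about what sync.master.ca.sh copies; the sync_ca helper is hypothetical.

```shell
#!/bin/sh
# Sketch of a CA sync (assumed content). The file list follows the kubeadm
# v1.13 HA guide for stacked control plane nodes.
CA_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key"

# sync_ca <host>...
# Prints the commands; review them, then pipe the output to sh as root.
sync_ca() {
  for host in "$@"; do
    # -n keeps ssh from reading stdin when the output is piped to sh.
    echo "ssh -n root@$host mkdir -p /etc/kubernetes/pki/etcd"
    for f in $CA_FILES; do
      echo "scp /etc/kubernetes/pki/$f root@$host:/etc/kubernetes/pki/$f"
    done
    echo "scp /etc/kubernetes/admin.conf root@$host:/etc/kubernetes/admin.conf"
  done
}

# Example (not executed here):
#   sync_ca tf-k8s-m2 tf-k8s-m3 | sh
```

Only the CA material and the service account keys are copied; each master then generates its own serving certificates from them.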

2.5.2 Install Master tf-k8s-m2

There are four steps:

- Configure the certificates, initialize the kubelet configuration, and start the kubelet

      [kube@tf-k8s-m2 ~]$ sudo kubeadm init phase certs all --config kubeadm-config.tf-k8s-m2.yaml

- Add etcd to the cluster

      [kube@tf-k8s-m2 root]$ kubectl exec -n kube-system etcd-tf-k8s-m1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://10.0.0.1:2379 member add tf-k8s-m2 https://10.0.0.2:2380

- Start kube-apiserver, kube-controller-manager, and kube-scheduler

      [kube@tf-k8s-m2 ~]$ sudo kubeadm init phase kubeconfig all --config kubeadm-config.tf-k8s-m2.yaml

- Mark the node as a master

      [kube@tf-k8s-m2 ~]$ sudo kubeadm init phase mark-control-plane --config kubeadm-config.tf-k8s-m2.yaml

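2.5.3 Install Master tf-k8s-m3

The procedure is the same as for tf-k8s-m2. The only differences are the kubeadm configuration file, kubeadm-config.tf-k8s-m3.yaml, and the member address passed when adding the etcd member.

The complete flow is as follows:

    # 1. Configure the certificates, initialize the kubelet configuration, and start the kubelet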
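Since both extra masters follow the same sequence with only the host name and IP changing, the flow can be sketched as a function that prints the commands for a given master. The certs, etcd member add, kubeconfig all, and mark-control-plane commands appear in the steps above; the remaining kubeadm init phase calls (kubeconfig kubelet, kubelet-start, etcd local, control-plane all) are assumed from the kubeadm 1.13 phase list, and the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print the join sequence for an additional master.
# join_master_cmds <hostname> <ip>
join_master_cmds() {
  name="$1"; ip="$2"; cfg="kubeadm-config.$name.yaml"
  # Step 1: certificates, kubelet config, start kubelet
  # (the kubeconfig kubelet and kubelet-start phases are assumed).
  echo "sudo kubeadm init phase certs all --config $cfg"
  echo "sudo kubeadm init phase kubeconfig kubelet --config $cfg"
  echo "sudo kubeadm init phase kubelet-start --config $cfg"
  # Step 2: register the new etcd member, then start the local etcd
  # (the etcd local phase is assumed).
  echo "kubectl exec -n kube-system etcd-tf-k8s-m1 -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://10.0.0.1:2379 member add $name https://$ip:2380"
  echo "sudo kubeadm init phase etcd local --config $cfg"
  # Step 3: control plane components (the control-plane all phase is assumed).
  echo "sudo kubeadm init phase kubeconfig all --config $cfg"
  echo "sudo kubeadm init phase control-plane all --config $cfg"
  # Step 4: mark the node as a master.
  echo "sudo kubeadm init phase mark-control-plane --config $cfg"
}

# Example: join_master_cmds tf-k8s-m3 10.0.0.3
```

Printing the commands rather than running them keeps the sketch safe to try and makes it easy to compare the m2 and m3 sequences side by side.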

2.5.4 Verify the Three Master Nodes

At this point all three master nodes are installed. Run kubectl get pods --all-namespaces to list all pods in the cluster.

    [kube@tf-k8s-m2 ~]$ kubectl get pods --all-namespaces

2.5.5 Join the Worker Nodes

This step is simple: run the join command on the worker nodes tf-k8s-n1 and tf-k8s-n2.

You can use the command printed after master tf-k8s-m1 was installed successfully, kubeadm join api.tf-k8s.xiangwushuo.com:6443 --token a1t7c1.mzltpc72dc3wzj9y --discovery-token-ca-cert-hash sha256:05f44b111174613055975f012fc11fe09bdcd746bd7b3c8d99060c52619f8738, or generate a new token.

The following shows how to generate a new token and certificate hash. Run these steps on tf-k8s-m1:

    # 1. Create a token
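The certificate hash is the SHA-256 digest of the cluster CA's public key. The sketch below computes it with the openssl pipeline given in the kubeadm documentation and assembles the join line; the function names are hypothetical, and the API endpoint is the one used in this tutorial.

```shell
#!/bin/sh
# ca_cert_hash <ca.crt>
# SHA-256 of the CA public key, in the form expected by
# --discovery-token-ca-cert-hash (openssl pipeline from the kubeadm docs).
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# join_command <token> <ca.crt>
# Print the kubeadm join line for the worker nodes (assumed helper).
join_command() {
  echo "sudo kubeadm join api.tf-k8s.xiangwushuo.com:6443 --token $1 --discovery-token-ca-cert-hash sha256:$(ca_cert_hash "$2")"
}

# On tf-k8s-m1 (not executed here):
#   join_command "$(sudo kubeadm token create)" /etc/kubernetes/pki/ca.crt
```

kubeadm can also print a ready-made join line with kubeadm token create --print-join-command, which avoids computing the hash by hand.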

Then run kubeadm join on each of the worker nodes tf-k8s-n1 and tf-k8s-n2 to add them to the cluster:

    sudo kubeadm join api.tf-k8s.xiangwushuo.com:6443 --token gz1v4w.sulpuxkqtnyci92f --discovery-token-ca-cert-hash sha256:b125cd0c80462353d8fa3e4f5034f1e1a1e3cc9bade32acfb235daa867c60f61

On tf-k8s-m1, kubectl get nodes now shows the new nodes (a node that has just joined may be NotReady at first; it becomes Ready after a short while).

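2.6 Deploy Highly Available CoreDNS

The default CoreDNS installation has a single point of failure. On tf-k8s-m1, run kubectl get pods -n kube-system -owide to see how the CoreDNS pods are distributed in the cluster (shown below).

The list shows that both CoreDNS pods run on tf-k8s-m1, which is a single point of failure.

    [kube@tf-k8s-m1 ~]$ kubectl get pods -n kube-system -owide

First delete the CoreDNS deployment, then create a new CoreDNS-HA.yaml configuration file as follows:

    apiVersion: apps/v1

Deploy the new CoreDNS:

    kubectl apply -f CoreDNS-HA.yaml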
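Whether the CoreDNS replicas ended up on different nodes can be checked by counting the distinct nodes that host them. The sketch below is one way to do that; the helper names are hypothetical, and it assumes the CoreDNS pods carry the k8s-app=kube-dns label that kubeadm applies by default.

```shell
#!/bin/sh
# distinct_nodes
# Read one node name per line on stdin and print how many different
# nodes appear.
distinct_nodes() {
  sort -u | grep -c .
}

# coredns_nodes
# Print the node name of every CoreDNS pod, one per line
# (assumes the default k8s-app=kube-dns label).
coredns_nodes() {
  kubectl -n kube-system get pods -l k8s-app=kube-dns \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}'
}

# Example (not executed here):
#   [ "$(coredns_nodes | distinct_nodes)" -ge 2 ] && echo "CoreDNS is spread out"
```

A result of 1 means all replicas still share a node, which is the single point of failure described above.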

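2.7 Deploy the Monitoring Component metrics-server

Since Kubernetes v1.11, collecting monitoring data through Heapster is no longer supported; the new collection component is metrics-server. metrics-server is much lighter than Heapster and does not persist data; it serves real-time monitoring queries.

First download all the files and save them in a folder named metrics-server.

Modify two places in metrics-server-deployment.yaml: apiVersion and image. The final metrics-server-deployment.yaml is as follows:

    ---

Enter the metrics-server folder just created and run kubectl apply -f . to deploy (note the dot after -f):

    [kube@tf-k8s-m1 metrics-server]$ kubectl apply -f .

Run kubectl get pods -n kube-system to confirm that the metrics-server pods are Running.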

2.8 Deploy Nginx-ingress-controller

Nginx-ingress-controller is a Docker image provided by the Kubernetes project that bundles an ingress controller with Nginx.

In this deployment, Nginx-ingress runs on tf-k8s-m1, tf-k8s-m2, and tf-k8s-m3 and listens on port 80 of the host.

Create the nginx-ingress.yaml file with the following content:

    apiVersion: v1

Deploy the Nginx ingress by running kubectl apply -f nginx-ingress.yaml.

2.9 部署kubernetes-dashboard

2.9.1 Dashboard 配置

新建部署 dashboard 的资源配置文件:kubernetes-dashboard.yaml,内容如下:

1 | apiVersion: v1 |

执行部署 kubernetes-dashboard,命令 kubectl apply -f kubernetes-dashboard.yaml.

在本地笔记本电脑上访问dashboard的时候,需要将dashboard.tf-k8s.xiangwushuo.com域名解析到三台master的IP(配置代理),简单地,可以直接在本地/etc/hosts添加

1 | ## 172.66.23.13 为tf-k8s-m1的外网IP |

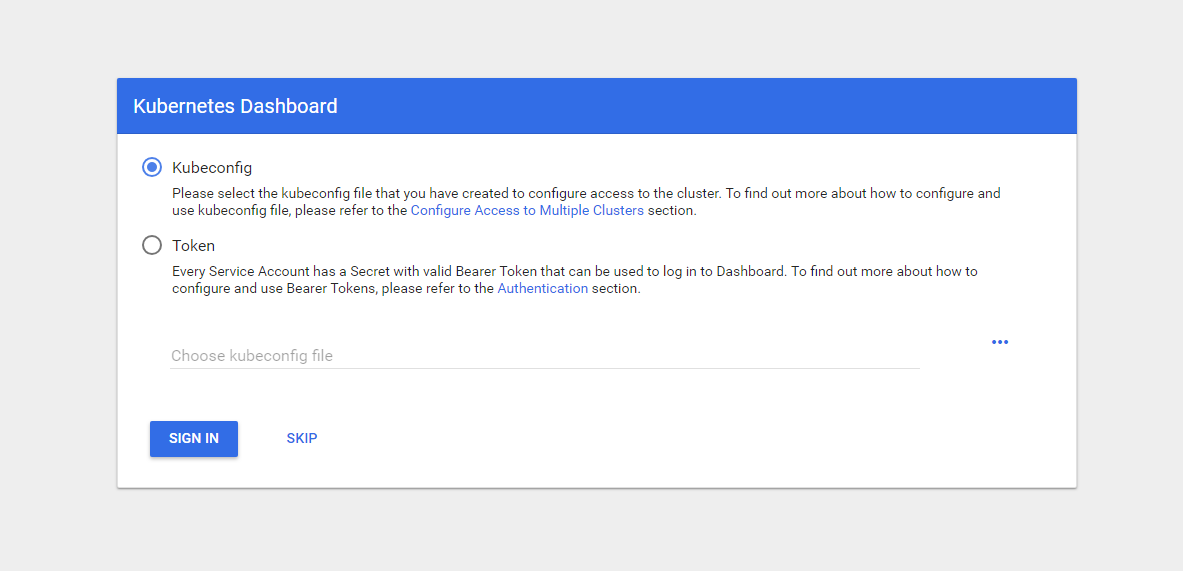

从浏览器访问: http://dashboard.tf-k8s.xiangwushuo.com

2.9.2 Access the Dashboard over HTTPS

Logging in to the dashboard fails when it is accessed over HTTP, so the dashboard service is configured for HTTPS access here.

There are three steps:

- Issue a self-signed certificate (or use a certificate issued by a trusted certificate authority)

      openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout ./tf-k8s.xiangwushuo.com.key -out ./tf-k8s.xiangwushuo.com.crt -subj "/CN=*.xiangwushuo.com"

- Create the Kubernetes Secret resource

      kubectl -n kube-system create secret tls secret-ca-tf-k8s-xiangwushuo-com --key ./tf-k8s.xiangwushuo.com.key --cert tf-k8s.xiangwushuo.com.crt

- Configure the dashboard ingress for HTTPS: edit kubernetes-dashboard.yaml and change the Ingress section to support HTTPS, as follows:

      ...omitted...

Run kubectl apply -f kubernetes-dashboard.yaml to apply the configuration.

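2.9.3 Log In to the Dashboard

Logging in to the dashboard requires a few things (don't worry, one script handles them all):

1. Create a service account (sa).
2. Create a cluster role binding that binds the account from step 1 to the cluster-admin role.
3. Look up the account's token and the secret that holds it (the token field in the secret is what you log in with).

Run the following script to obtain the login token: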

1 |

|
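The three steps above can be sketched as follows. This is an assumed reconstruction, not the original script: the account name dashboard-admin and the function name are hypothetical, and the commands are only printed so they can be reviewed before being run.

```shell
#!/bin/sh
# Sketch (assumed account name dashboard-admin): print the commands that
# create the service account, bind it to cluster-admin, and show its token.

# dashboard_token_cmds [service-account-name]
dashboard_token_cmds() {
  sa="${1:-dashboard-admin}"
  cat <<EOF
kubectl -n kube-system create serviceaccount $sa
kubectl create clusterrolebinding $sa --clusterrole=cluster-admin --serviceaccount=kube-system:$sa
kubectl -n kube-system describe secret \$(kubectl -n kube-system get secret | grep $sa-token | awk '{print \$1}')
EOF
}

# Example (not executed here):
#   dashboard_token_cmds | sh
```

The last command relies on Kubernetes v1.13 creating a secret named after the service account with a -token- suffix; the token field in its description is the value to paste into the login page.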

Copy the token and use it to log in. Sample token:

1 |

|
